Low-Light Image Enhancement

This section presents a concise overview of low-light image enhancement techniques and their significance in the context of autonomous driving.

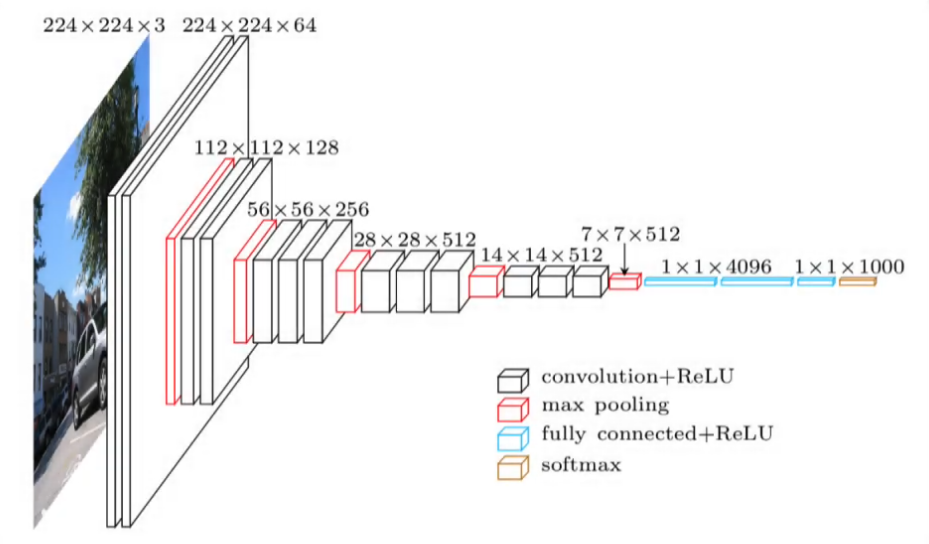

The goal of low-light image enhancement is to raise the brightness and visibility of images captured in dark conditions. One way to tackle the problem is to train a convolutional neural network (CNN) with supervised learning, which requires a correctly exposed reference image (the “ground truth”) for each dark input. The loss is computed by comparing this reference image with the image produced by the model, and the network weights are then updated through backpropagation to minimize it. Unsupervised methods, in contrast, need no reference image, so their loss has to be computed differently, without comparing against a ground truth.
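The supervised loop can be illustrated with a minimal Python sketch. It is not the CNN discussed here: as an assumption, a hypothetical one-parameter model f(Y) = gain · Y stands in for the network, pixel values are flat lists normalized to [0, 1], and the gradient that backpropagation would compute is derived by hand for this tiny model. The names `train_gain` and `mse`, the data, and the learning-rate and step values are illustrative choices only.

```python
# Minimal supervised-training sketch.
# Assumption: a hypothetical one-parameter "gain" model stands in for a CNN;
# images are flat lists of pixel values normalized to [0, 1].


def mse(pred, target):
    """Mean squared error between the model output and the ground truth."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def train_gain(dark, reference, lr=10.0, steps=100):
    """Learn a brightness gain so that gain * dark approximates reference."""
    gain = 1.0  # start from the identity mapping (no brightening)
    n = len(dark)
    for _ in range(steps):
        pred = [gain * y for y in dark]  # forward pass: f(Y)
        # Backward pass, derived by hand for this one-parameter model:
        # dLoss/dgain = (2 / N) * sum((gain * y - x) * y)
        grad = 2.0 / n * sum((p - x) * y for p, x, y in zip(pred, reference, dark))
        gain -= lr * grad  # gradient-descent step that lowers the loss
    return gain, mse([gain * y for y in dark], reference)


# Hypothetical data: the reference is exactly twice as bright as the dark input.
dark = [0.10, 0.15, 0.20, 0.25]
reference = [0.20, 0.30, 0.40, 0.50]
gain, loss = train_gain(dark, reference)
print(f"learned gain = {gain:.4f}, final loss = {loss:.2e}")
```

In a framework such as PyTorch, the hand-written gradient would be replaced by automatic differentiation (`loss.backward()` followed by `optimizer.step()`), which is what lets the same loop scale to a CNN with many weights.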

In some cases, the image produced by the model contains artifacts, color distortions, or noise, so the network needs dedicated structures or blocks that suppress these defects. The performance of the algorithm is evaluated with metrics such as the Peak Signal-to-Noise Ratio (PSNR) and the Structural Similarity Index (SSIM). PSNR is measured in decibels (dB) and increases as the loss (the mean squared error between output and reference) decreases, since it is a logarithmic function of the inverse of that error. SSIM is at most 1, a value reached only when the two images are identical, and in practice it usually lies between 0 and 1; because it compares luminance, contrast, and structure, it is intended to reflect image quality as perceived by the human eye (visual perception). The two metrics capture different aspects of quality, so a high PSNR does not necessarily imply a high SSIM (close to 1).
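That PSNR and SSIM can disagree can be checked with a small Python sketch. As a simplifying assumption, `global_ssim` computes the SSIM statistic once over the whole image instead of averaging it over local windows as the standard SSIM does; the constants K1 = 0.01 and K2 = 0.03 are the usual defaults, and the 8-pixel "images" are made-up values. Both distorted versions have exactly the same MSE, and therefore the same PSNR, yet their SSIM differs.

```python
# Simplified (single-window) SSIM sketch.
# Assumption: statistics are computed over the whole image rather than over
# local Gaussian windows, which is enough to contrast SSIM with PSNR.


def global_ssim(x, y, max_val=255.0):
    """SSIM computed from global means, variances, and covariance."""
    n = len(x)
    c1 = (0.01 * max_val) ** 2  # stabilizing constant, K1 = 0.01
    c2 = (0.03 * max_val) ** 2  # stabilizing constant, K2 = 0.03
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    return ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )


def mse(x, y):
    """Mean squared error; equal MSE means equal PSNR for the same MAX."""
    return sum((a - b) ** 2 for a, b in zip(x, y)) / len(x)


reference = [50, 100, 150, 200, 50, 100, 150, 200]
shifted = [v + 10 for v in reference]  # uniform brightness offset
noisy = [v + (10 if i % 2 == 0 else -10) for i, v in enumerate(reference)]  # +/-10 pattern

print(mse(reference, shifted), mse(reference, noisy))  # 100.0 100.0
print(round(global_ssim(reference, shifted), 3), round(global_ssim(reference, noisy), 3))  # 0.997 0.983
```

The uniform brightness shift leaves the image structure intact, so SSIM stays close to 1, while the alternating ±10 pattern alters contrast and structure and is penalized more, even though PSNR cannot tell the two distortions apart.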

The figure shows an example of a dark image and its clear counterpart. The reference image is the original image at the top, which is the one used to compute the loss described above.

\[ \mathrm{Loss} = \frac{1}{N}\left\lVert f(Y) - X \right\rVert_2^2 \]

\[ \mathrm{PSNR} = 20\log_{10}\left(\frac{\mathrm{MAX}}{\sqrt{\mathrm{Loss}}}\right) \]

where Y is the degraded (dark) image, X is the reference image, f is the convolutional network, N is the number of pixel values, and MAX is the maximum possible pixel value (255 for 8-bit images, 1 for images normalized to [0, 1]). PSNR is thus obtained by dividing MAX by the square root of the loss, applying the decimal logarithm, and multiplying the result by 20. Finally, the last figure compares the image obtained by the model with the initial dark image.
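The PSNR computation can be sketched in Python as follows. It assumes the loss is the mean squared error between f(Y) and X and uses the peak pixel value MAX (255 for 8-bit images, 1 for images normalized to [0, 1]) in the numerator, which is the standard definition of PSNR; `psnr_from_loss` and `psnr_images` are illustrative names, not an existing library API.

```python
import math


def psnr_from_loss(mse_loss, max_val=255.0):
    """PSNR in dB from an MSE loss: 20 * log10(MAX / sqrt(MSE))."""
    if mse_loss == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 20 * math.log10(max_val / math.sqrt(mse_loss))


def psnr_images(output, reference, max_val=255.0):
    """Compute the MSE between f(Y) and X, then convert it to PSNR."""
    mse_loss = sum((a - b) ** 2 for a, b in zip(output, reference)) / len(reference)
    return psnr_from_loss(mse_loss, max_val)


# Worked example: MSE = 100 on an 8-bit scale (MAX = 255).
print(round(psnr_from_loss(100.0), 2))  # 28.13
# Equivalent form: 10 * log10(MAX^2 / MSE).
print(round(10 * math.log10(255.0 ** 2 / 100.0), 2))  # 28.13
# The same relative error on images normalized to [0, 1] (MAX = 1).
print(round(psnr_from_loss(100.0 / 255.0 ** 2, max_val=1.0), 2))  # 28.13
```

Because PSNR depends on MAX, values are only comparable between experiments that use the same pixel range.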