An important task in autonomous driving is detecting and correctly classifying traffic signs, since driving decisions depend on them. It is also important to know what a sign says when it contains numbers or letters. The problem becomes harder when driving at night, since visibility is greatly reduced.

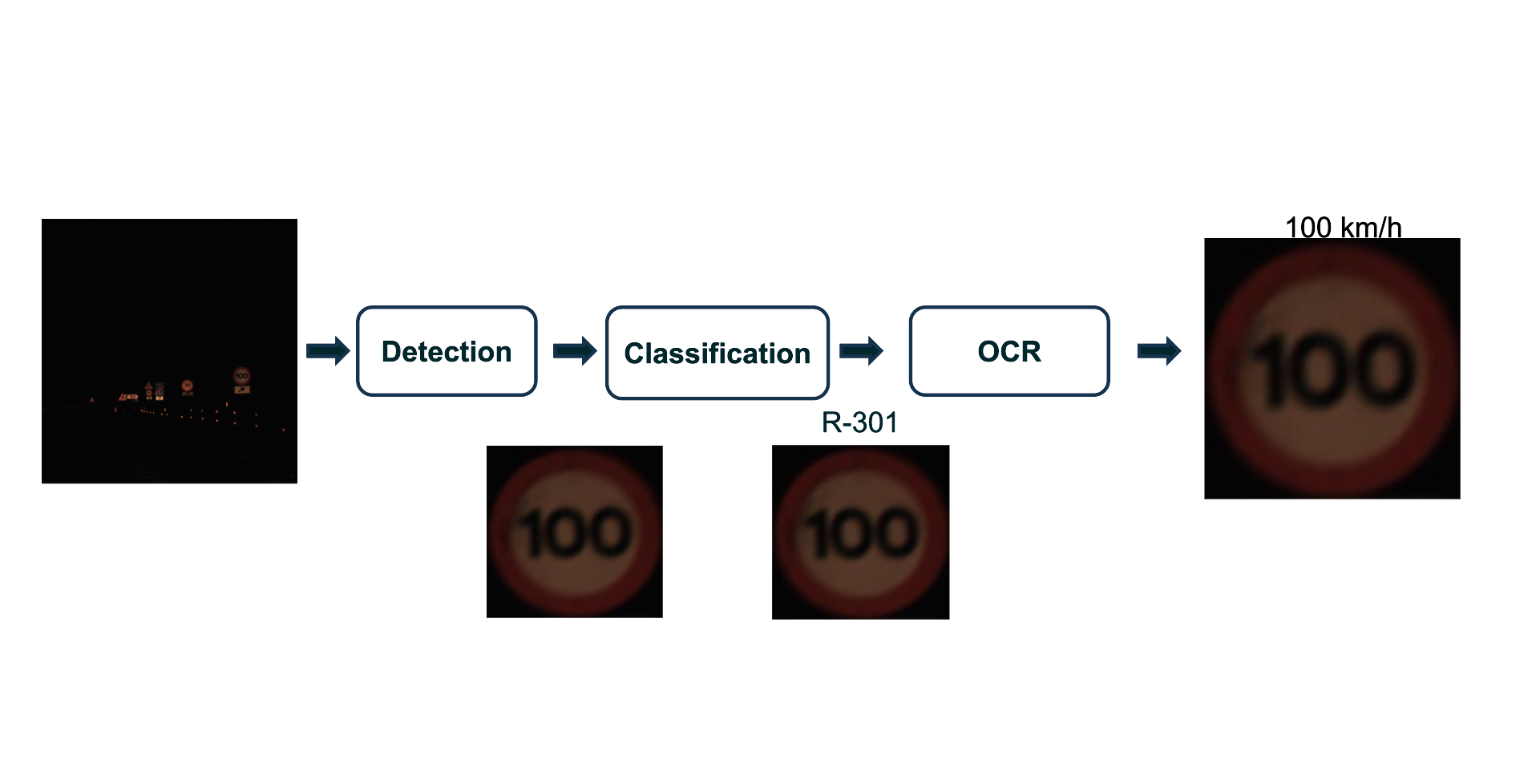

This problem could be solved with an enormous model capable of detecting, classifying and reading the signs in a single step. Nonetheless, we decided to divide the task into three main parts: Detection, Classification, and Reading. We developed a model for each part and then combined them.

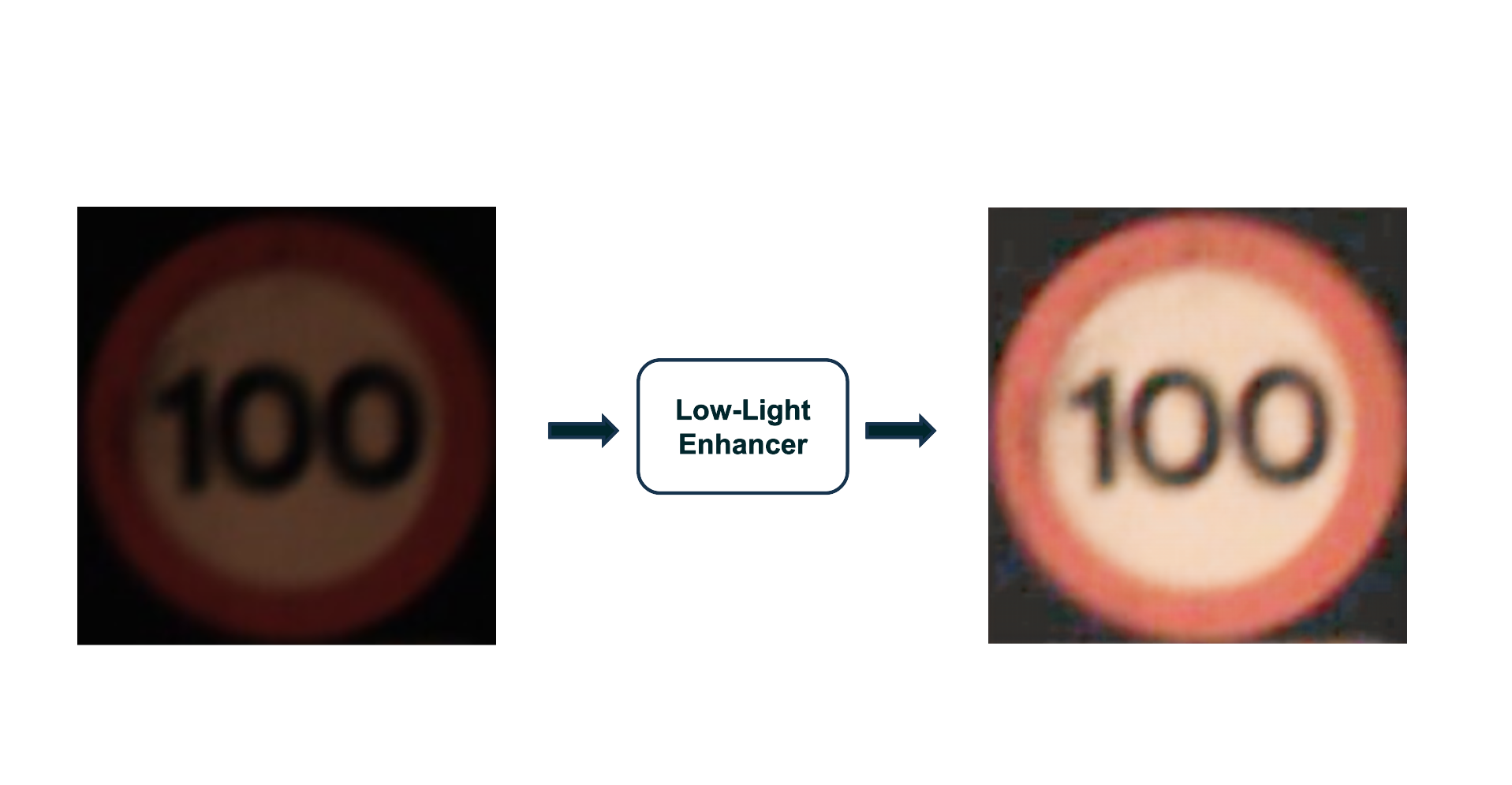

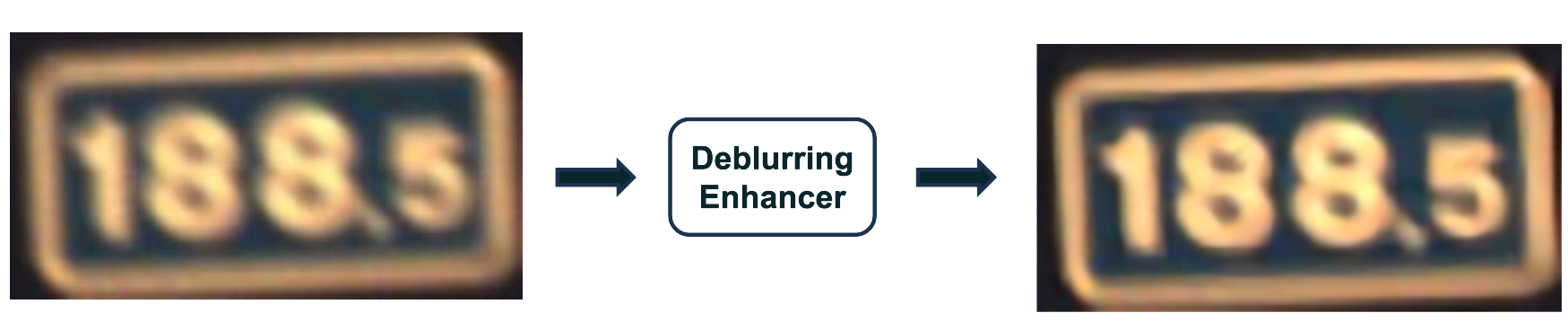

To improve the detection and character recognition tasks, we could also apply our image restoration and enhancement models.

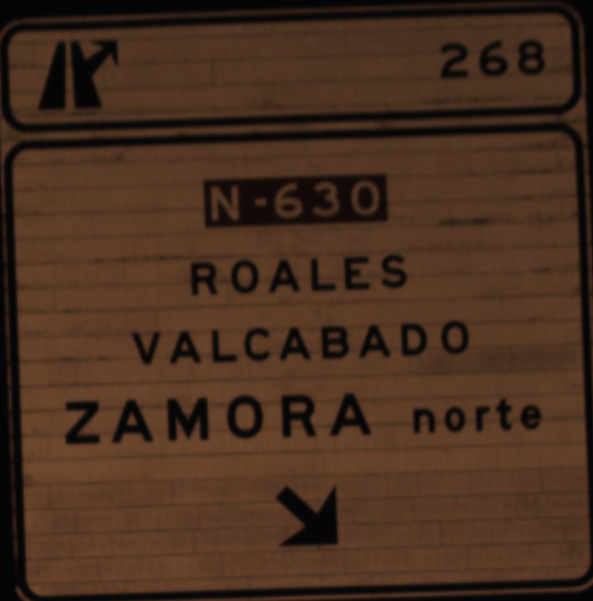

First, we take the full image and detect the sign using YOLOv10. Using the bounding box predicted by YOLOv10, we crop the image so that only the detected sign is kept. The model is trained to detect only one sign per image.
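A minimal sketch of the cropping step, assuming the detector returns boxes in pixel `(x1, y1, x2, y2)` format with one confidence score per box, and that the image is a NumPy array of shape H × W × C. Because the model is trained to find a single sign, the sketch keeps only the highest-confidence box. `crop_best_box` is a hypothetical helper, not part of YOLOv10.

```python
from typing import Optional

import numpy as np


def crop_best_box(image: np.ndarray, boxes_xyxy: np.ndarray,
                  scores: np.ndarray) -> Optional[np.ndarray]:
    """Return the crop for the highest-scoring box, or None if there is none."""
    if len(boxes_xyxy) == 0:
        return None
    # The detector is expected to find one sign, so keep the most confident box
    best = int(np.argmax(scores))
    x1, y1, x2, y2 = boxes_xyxy[best]
    h, w = image.shape[:2]
    # Round outward to whole pixels and clamp to the image borders
    x1, y1 = max(0, int(np.floor(x1))), max(0, int(np.floor(y1)))
    x2, y2 = min(w, int(np.ceil(x2))), min(h, int(np.ceil(y2)))
    if x2 <= x1 or y2 <= y1:
        return None  # degenerate box after clamping
    # NumPy images are indexed [row, column], i.e. [y, x]
    return image[y1:y2, x1:x2].copy()
```

With the `ultralytics` package, the inputs could plausibly come from `results = YOLO("yolov10n.pt")(image)` via `results[0].boxes.xyxy.cpu().numpy()` and `results[0].boxes.conf.cpu().numpy()`. The weights file name is an assumption; a checkpoint trained on the sign dataset would be used in practice.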

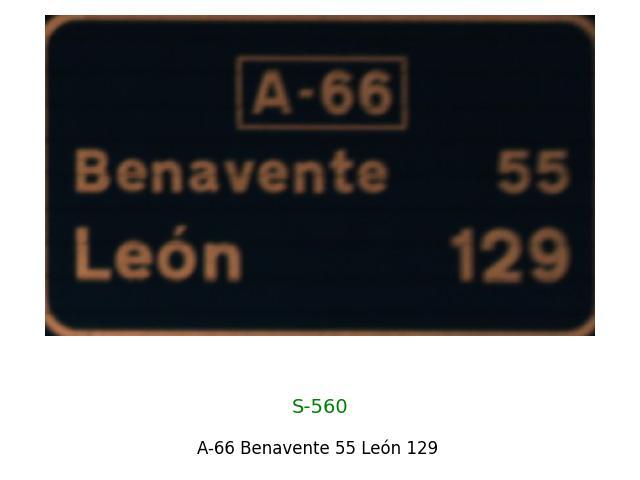

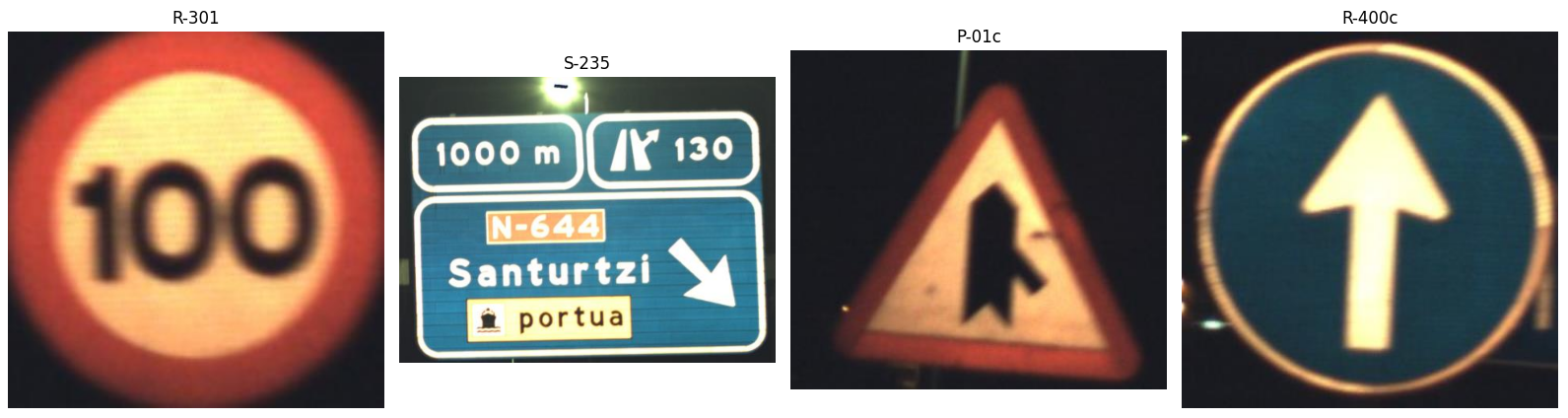

This crop is the input to the classification model, which categorizes the traffic sign according to the unique code assigned by the Spanish Ministry of Transport. The result of this classifier is shown in the image below. You can verify each code at this link: Vertical Traffic Signs
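To illustrate how a classifier's raw output can be turned into a sign code, here is a small sketch that applies a softmax to the class scores and returns the top-1 code with its probability. The label list and codes such as `"R-301"` are hypothetical placeholders; the actual classifier architecture and label set are not described here.

```python
import math
from typing import Sequence, Tuple


def top1_code(logits: Sequence[float], codes: Sequence[str]) -> Tuple[str, float]:
    """Map raw classifier scores to the most likely sign code and its probability."""
    if len(logits) == 0 or len(logits) != len(codes):
        raise ValueError("need one score per code, and at least one code")
    # Subtract the max before exponentiating for numerical stability
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    best = max(range(len(logits)), key=lambda i: logits[i])
    return codes[best], exps[best] / total
```

For example, `top1_code([0.0, 2.0, 0.0], ["R-1", "R-301", "P-1"])` selects `"R-301"` with a probability of about 0.79.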

The classification above tells us whether the traffic sign contains any numbers or letters, which leads us to the next model: OCR. Optical Character Recognition (OCR) models have been studied for years, and many available models can obtain accurate results. After some brief research, we chose EasyOCR to return the text written on traffic signs.
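EasyOCR's `Reader.readtext` returns a list of `(box, text, confidence)` tuples, where `box` holds the four corner points of a detected text fragment. A single sign can yield several fragments, so one possible post-processing step, sketched here as an assumption rather than the method used in this project, is to join them in reading order (top to bottom, then left to right). The `line_tol` pixel tolerance for grouping fragments into lines is an arbitrary illustrative value.

```python
from typing import List, Sequence, Tuple

# Shape of one item returned by EasyOCR's readtext(): (corner points, text, confidence)
OcrItem = Tuple[Sequence[Sequence[float]], str, float]


def assemble_text(results: List[OcrItem], line_tol: float = 10.0) -> str:
    """Join OCR fragments top-to-bottom, then left-to-right within each line."""
    # Use the top and left extent of each fragment's box as its position
    items = sorted(
        (min(p[1] for p in box), min(p[0] for p in box), text)
        for box, text, _conf in results
    )
    lines: List[Tuple[float, List[Tuple[float, str]]]] = []
    for top, left, text in items:
        # A fragment joins the current line if its top is within line_tol
        # pixels of that line's first fragment
        if lines and abs(top - lines[-1][0]) <= line_tol:
            lines[-1][1].append((left, text))
        else:
            lines.append((top, [(left, text)]))
    return "\n".join(
        " ".join(text for _, text in sorted(words)) for _, words in lines
    )
```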

Before using EasyOCR to read the classified sign, we created a filter that outputs ‘None’ if the sign has no letters or numbers (such as a give-way sign or a warning sign), or ‘Couldn’t be resolved’ if the sign cannot be read properly. If the sign is clear, EasyOCR returns its content.
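The filter can be sketched as a small function, assuming it receives the predicted code, the cropped image, an OCR callable that returns `(box, text, confidence)` tuples, and a set of codes known to carry text. OCR is only invoked for those codes. The contents of `text_codes` and the `min_conf` threshold used to decide that a reading couldn't be resolved are hypothetical choices, not values from this project.

```python
from typing import Any, Callable, Container, List, Sequence, Tuple

OcrResult = List[Tuple[Sequence[Sequence[float]], str, float]]

NO_TEXT = "None"
UNRESOLVED = "Couldn't be resolved"


def read_sign(code: str, crop: Any, ocr: Callable[[Any], OcrResult],
              text_codes: Container[str], min_conf: float = 0.5) -> str:
    """Return the sign's text, or a fixed marker when there is nothing to read."""
    # Signs without letters or numbers skip OCR entirely
    if code not in text_codes:
        return NO_TEXT
    # Keep only non-empty fragments the OCR engine is reasonably confident about
    kept = [text.strip() for _box, text, conf in ocr(crop)
            if conf >= min_conf and text.strip()]
    if not kept:
        return UNRESOLVED
    return " ".join(kept)
```

In practice `ocr` could be the `readtext` method of an EasyOCR reader, for example `easyocr.Reader(["es"]).readtext`.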

The final result is therefore the cropped sign image together with its classification and its reading.
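Putting the three stages together, the overall flow can be sketched as follows. Each stage is passed in as a plain function, so the sketch makes no claim about how the actual models are loaded or called; `SignResult` and `run_pipeline` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class SignResult:
    crop: Any      # cropped sign image
    code: str      # predicted traffic-sign code
    reading: str   # OCR text, "None", or "Couldn't be resolved"


def run_pipeline(image: Any,
                 detect: Callable[[Any], Any],
                 classify: Callable[[Any], str],
                 read: Callable[[str, Any], str]) -> Optional[SignResult]:
    """Chain detection, classification and reading for a single image."""
    crop = detect(image)          # stage 1: locate and crop the sign
    if crop is None:
        return None               # no sign found, nothing to classify
    code = classify(crop)         # stage 2: assign a sign code
    reading = read(code, crop)    # stage 3: read text when the code calls for it
    return SignResult(crop=crop, code=code, reading=reading)
```

Keeping the stages as separate callables mirrors the decision to split the task into three models: any one of them can be retrained or swapped without touching the others.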