Gesture Recognition to Support Driving

Designing and Evaluating a Deep Learning-Powered Interface for Contactless Driver-Vehicle Interaction. The solution reduces distraction by replacing physical inputs with intuitive hand gestures, combining real-time processing with robust performance evaluation.

Artificial Intelligence (AI) has had a substantial impact on autonomous driving in recent years, and its techniques are now applied to many related problems. One of them is gesture recognition, the subject of this post. Many other tasks in this field also rely on computer vision, such as generating synthetic scenarios to train and validate detection or trajectory prediction models.

Gesture recognition involves detecting a specific gesture, which need not be performed with the hands, and associating it with a system action. In this post we focus on gestures performed with the hands. The project has two objectives. The first is to define a fixed set of gestures and associate each one with an action the vehicle might perform: for instance, starting, stopping, or simpler actions like turning up the radio volume or rolling down the windows. The second is a feature that controls the cursor automatically with hand movement.

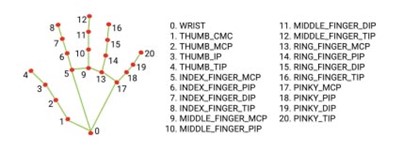

The predefined gestures are defined from the hand coordinates provided by the ‘mediapipe’ library, which let the program identify the finger positions that correspond to each gesture we selected. The selected gestures are as follows:

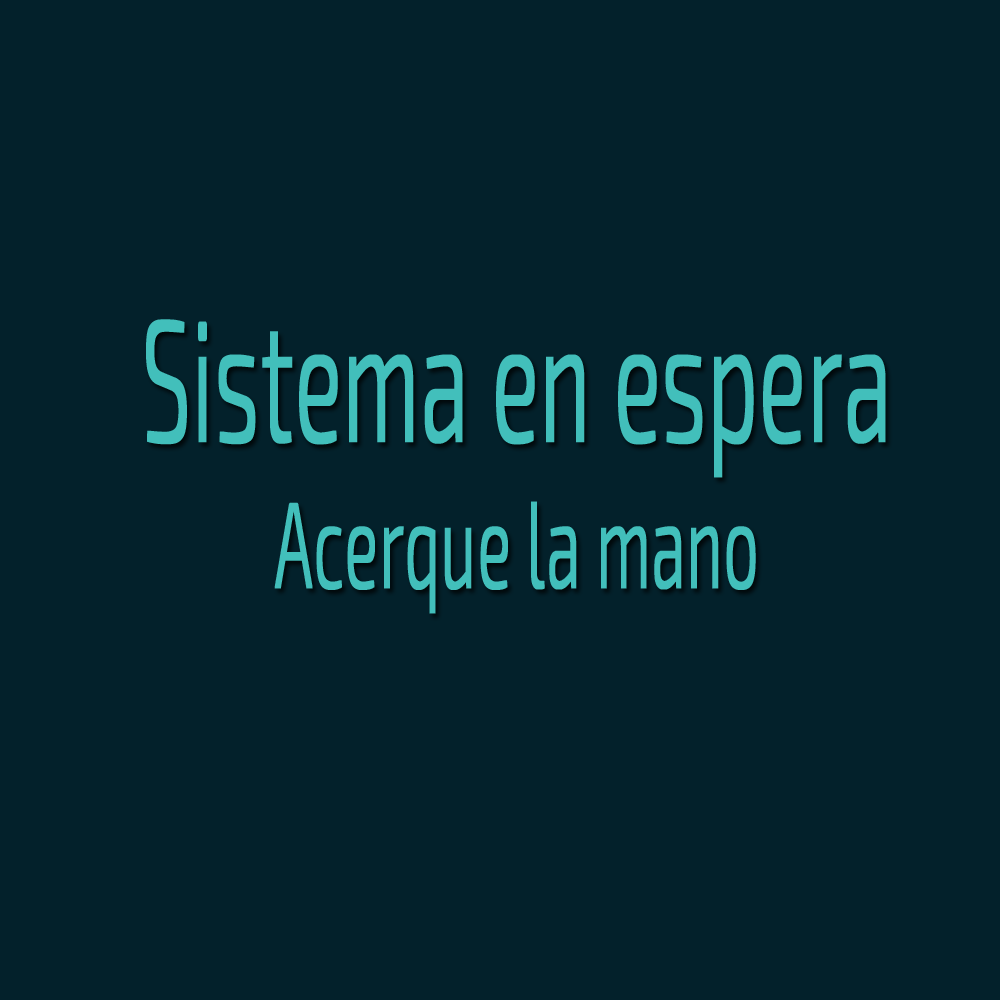

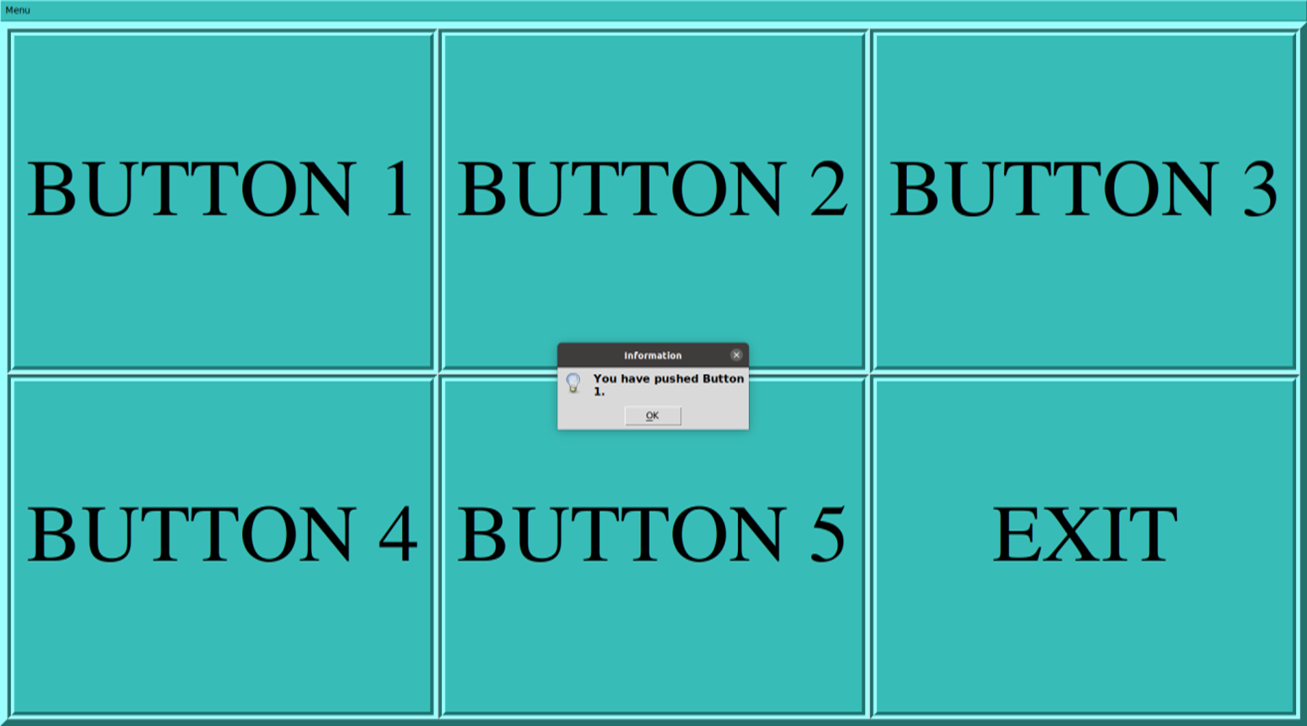
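
As a rough illustration of how finger positions can be turned into a gesture label, here is a minimal sketch. It assumes the 21-point hand layout that ‘mediapipe’ uses (wrist at index 0, fingertips at 4, 8, 12, 16 and 20) with normalized coordinates whose y axis points down, and an upright hand facing the camera. The gesture table and action names are hypothetical placeholders, not the gestures shown in the images above.

```python
from math import hypot

# Landmark indices in the 21-point hand layout (tip and middle joint per finger).
FINGER_TIPS = {"index": 8, "middle": 12, "ring": 16, "pinky": 20}
FINGER_PIPS = {"index": 6, "middle": 10, "ring": 14, "pinky": 18}
THUMB_TIP, THUMB_IP, PINKY_MCP = 4, 3, 17


def extended_fingers(landmarks):
    """Return (thumb, index, middle, ring, pinky) booleans.

    `landmarks` is a list of 21 (x, y) tuples in normalized image
    coordinates. Assumes an upright hand: y grows downward, so an
    extended finger has its tip above (smaller y than) its middle joint.
    """
    fingers = [
        landmarks[FINGER_TIPS[name]][1] < landmarks[FINGER_PIPS[name]][1]
        for name in ("index", "middle", "ring", "pinky")
    ]
    # Heuristic for the thumb: it counts as extended when its tip is
    # farther from the base of the pinky than its own inner joint is.
    px, py = landmarks[PINKY_MCP]

    def dist_to_pinky_base(i):
        return hypot(landmarks[i][0] - px, landmarks[i][1] - py)

    thumb = dist_to_pinky_base(THUMB_TIP) > dist_to_pinky_base(THUMB_IP)
    return (thumb, *fingers)


# Hypothetical gesture table: finger pattern -> action name.
GESTURES = {
    (True, True, True, True, True): "stop",
    (False, False, False, False, False): "start",
    (False, True, False, False, False): "volume_up",
    (False, True, True, False, False): "window_down",
}


def classify(landmarks):
    """Return the action name for the detected pattern, or None."""
    return GESTURES.get(extended_fingers(landmarks))
```

In a real pipeline the `(x, y)` pairs would be read from the landmarks that the hand detector returns for each frame. The simple tip-above-joint rule breaks down when the hand is rotated, so a production system would likely need rotation-invariant features or a trained classifier.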

The program starts with a home screen that shows a main menu in the upper left corner. Clicking it opens a tab with the two features of our program: gesture recognition and automatic cursor control. In gesture recognition mode, the program needs to detect at least one hand before recognition begins; until then, the system remains in standby. Once a hand is detected, the system indicates that it is waiting for one of the predefined gestures. After a gesture is recognized, the program ignores any new gesture for a fixed time that we set in the program, even if the hand changes position. This lockout is a safety measure: it prevents contradictory or dangerous actions from being executed in quick succession, which could lead to an accident.
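
The standby, waiting, and lockout behaviour described above can be sketched as a small state holder. This is only an illustration under assumptions: the class name `GestureGate`, the 2-second default, and the state labels are hypothetical and not taken from the project's code.

```python
import time


class GestureGate:
    """Accept one gesture, then ignore new ones for `cooldown_s` seconds."""

    def __init__(self, cooldown_s=2.0, clock=time.monotonic):
        self.cooldown_s = cooldown_s   # lockout duration (assumed value)
        self.clock = clock             # injectable so it can be tested
        self._locked_until = None

    def state(self, hand_detected):
        """Return 'locked', 'waiting' or 'standby' for the current moment."""
        if self._locked_until is not None and self.clock() < self._locked_until:
            return "locked"
        return "waiting" if hand_detected else "standby"

    def submit(self, gesture, hand_detected=True):
        """Return the gesture if it is accepted, otherwise None."""
        if gesture is None or self.state(hand_detected) != "waiting":
            return None
        # Start the lockout window so later gestures are ignored for a while.
        self._locked_until = self.clock() + self.cooldown_s
        return gesture
```

Called once per video frame, `submit` would return an action only when a hand is present and no lockout is active. Using a monotonic clock avoids surprises if the system time changes while the program is running.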

Finally, once we have finished testing the program, we return to the screen with the main menu, open the menu, and click the ‘Log out’ option to close the application completely. The ‘Reset’ option is useful if a problem occurs while either of the two features is running. For example, after a system failure or crash, clicking this option returns us to the main screen.

With all this, we have met the objectives for gesture recognition and automatic cursor control from the menu. Besides the aforementioned ‘mediapipe’ library, we used the ‘mouse’ library for cursor control and ‘tkinter’ to build the program’s complete graphical interface.
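
For the cursor control feature, one plausible approach is to map a normalized hand coordinate to screen pixels and smooth it before moving the cursor. The sketch below is an assumption about how this could work, not the project's implementation: the function names, the mirroring choice, and the smoothing factor are all hypothetical.

```python
def to_screen(norm_x, norm_y, screen_w, screen_h, mirror=True):
    """Map a normalized (0..1) hand coordinate to a pixel position.

    Values outside 0..1 are clamped to the screen edge. With `mirror`
    set, x is flipped so the cursor follows the hand as in a mirror,
    which is usually what feels natural with a front-facing camera.
    """
    x = min(max(norm_x, 0.0), 1.0)
    y = min(max(norm_y, 0.0), 1.0)
    if mirror:
        x = 1.0 - x
    return int(x * (screen_w - 1)), int(y * (screen_h - 1))


def smooth(previous, target, alpha=0.3):
    """Exponential smoothing: move a fraction `alpha` toward the target.

    Lower alpha gives a steadier but slower cursor.
    """
    px, py = previous
    tx, ty = target
    return px + alpha * (tx - px), py + alpha * (ty - py)


# Possible use inside the frame loop (not executed here):
#   target = to_screen(tip_x, tip_y, screen_w, screen_h)
#   pos = smooth(pos, target)
#   mouse.move(int(pos[0]), int(pos[1]))  # 'mouse' library, absolute move
```

The screen size could come from the ‘tkinter’ root window, for example through `winfo_screenwidth()` and `winfo_screenheight()`. Smoothing matters because raw per-frame coordinates tend to jitter, which makes a directly driven cursor hard to control.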